The problem: Rate-limiting and throttling

On a recent project we ran into the following: the application was scaling quickly, and almost all of its external and long-running processes were handled with Laravel Horizon. This worked quite well until we started hitting rate limits, because these jobs connect to many external APIs. For some APIs we could ignore rate limits, for some we had a fixed number of requests per X seconds, and for some we had a fixed number of requests per X seconds, per user. On top of that, in our case it is crucial that one user’s jobs run sequentially rather than in parallel, and that they run in near real time.

If you’ve used Laravel Horizon before, you have probably looked into job throttling using Redis::throttle, or Laravel’s built-in WithoutOverlapping job middleware. Neither solved our case properly. Since we were throttling and avoiding overlaps for many different APIs and many different users, we ran into two issues:

- Simply throttling to X jobs per Y seconds, per API, per user would not work, since many APIs use different rate-limiting algorithms. The leaky bucket algorithm is our biggest bottleneck here (especially if the bucket has limits per second, minute, hour and day). As far as I know there is no built-in fix for this in Laravel (if there is, please let me know!).

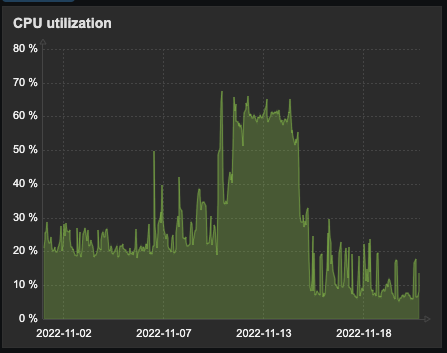

- Setting up WithoutOverlapping made our servers go haywire. After looking into the source code, it appears that every time a released job’s delay has passed, the job is picked up and instantiated again. The result is that, with as many jobs as our project runs, Laravel was instantiating and then re-queueing thousands upon thousands of jobs every second. Redis can handle a lot, but this was not a desirable situation for us.

As a result of all this, users were receiving incorrect data, jobs were going missing, our server resources were being exhausted, or the API connections were running (much) slower than required (and the client was not happy).

Server CPU usage while using WithoutOverlapping – 10th to 15th of November (sad server noises)

Simply increasing the servers’ CPU specs or launching more (load-balanced) servers indefinitely is not desirable.

Custom throttling middleware

Our idea was to use a custom throttling middleware, which would take into account:

- Which API and which user we were performing requests for

- Keeping track of how many requests were being done, and how many were remaining (in the leaky bucket)

- Delaying jobs until the moment we know the job can run successfully
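To make the last two points concrete, here is a minimal sketch in plain PHP of tracking a request budget across several time windows. Everything in it is an assumption for illustration: the class name RequestBudget, the reserve() method and the in-memory storage are made up, and it keeps a sliding log of timestamps rather than implementing a true leaky bucket. A real middleware would keep this state in a shared store such as Redis and would adjust it based on what the API reports.

```php
<?php

/**
 * Hypothetical request budget for API/user keys (illustration only).
 * State is kept in memory; workers in separate processes would need a shared store.
 */
final class RequestBudget
{
    /** @var array<string, list<float>> reservation timestamps per key, oldest first */
    private array $reserved = [];

    /**
     * @param array<int, int> $limits window length in seconds => max requests per window
     */
    public function __construct(private array $limits)
    {
        if ($limits === []) {
            throw new InvalidArgumentException('At least one limit is required.');
        }
    }

    /**
     * Reserve $count requests for $key at time $now (seconds, assumed non-decreasing).
     * Returns 0.0 if reserved, otherwise how many seconds to delay the job.
     */
    public function reserve(string $key, int $count, float $now): float
    {
        $history = $this->reserved[$key] ?? [];
        $wait = 0.0;

        foreach ($this->limits as $window => $limit) {
            if ($count > $limit) {
                throw new InvalidArgumentException("Cannot fit {$count} requests in a {$window}s window.");
            }
            // Reservations that still count against this window.
            $inWindow = array_values(array_filter(
                $history,
                fn (float $t): bool => $t > $now - $window
            ));
            $overflow = count($inWindow) + $count - $limit;
            if ($overflow > 0) {
                // Wait until the $overflow oldest reservations have left the window.
                $wait = max($wait, $inWindow[$overflow - 1] + $window - $now);
            }
        }

        if ($wait > 0.0) {
            return $wait; // nothing is reserved; the caller can release the job with this delay
        }

        // Drop entries older than the longest window, then record the new reservations.
        $oldest = $now - max(array_keys($this->limits));
        $history = array_values(array_filter($history, fn (float $t): bool => $t > $oldest));
        for ($i = 0; $i < $count; $i++) {
            $history[] = $now;
        }
        $this->reserved[$key] = $history;

        return 0.0;
    }
}
```

With limits of 2 requests per second and 3 per minute (`new RequestBudget([1 => 2, 60 => 3])`), reserving 2 requests at t = 0 succeeds, while a third at t = 0.5 returns a delay of 0.5 seconds that a job middleware could use when releasing the job back onto the queue.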

After implementing this we quickly realized it was our next nightmare. We would have a supervisor per API, and a queue per group of users, defined by their user role. By default, if you assign, say, 100 workers to a queue, Laravel will process jobs in parallel, up to 100 at a time, which is great in essence!

But when you want to run at most 1 job at a time per user, per API, with custom throttling logic, you run into the same problem as before. For example, 10 jobs could be dispatched at the same time for one user, each going through our custom throttling logic and reserving requests to be handled later, and then all 10 jobs would fail because none of them could get the resources it required.

Setting up dynamic queues per API and user

Setting up dynamic queues was our next idea: let’s have 1 queue per user. This way, we can ensure jobs are handled sequentially, and users and their API connections won’t get in each other’s way. So we forked Laravel Horizon, made sure queues were generated dynamically, and made Horizon reload these queues each time we marked a user as needing a queue (user has status approved, has API credentials verified, other setup done).

Nice.

Everything worked as I expected: perfectly. For a while. Then the application started getting more and more users. The server load this generated, and the spikes every time a user set their API credentials and Horizon reloaded all queues, were no good.

Laravel queues can auto-scale, but by default each has at least 1 worker, and each worker reserves some RAM. Stopping and starting hundreds of queues every few minutes once again left us in a situation that was no good. Aside from that, it meant we would have hundreds of queues (and workers) doing absolutely nothing until jobs were queued. Once again, simply increasing the servers’ RAM or scaling horizontally indefinitely is not desirable.

Existing solutions

You might think: surely there must be existing solutions? This was my thought as well. I’ve Googled and Googled, but it appears that either

- Nobody has had (and solved) this problem

- The solutions are not (officially) supported

We like having supported (official) packages, with continuous support. If not official, at least (very) popular so we are guaranteed we can keep using it for the foreseeable future.

I did not find any.

If any of you solved the dynamic queues/rate-limiting issue, I’d be very curious to hear!

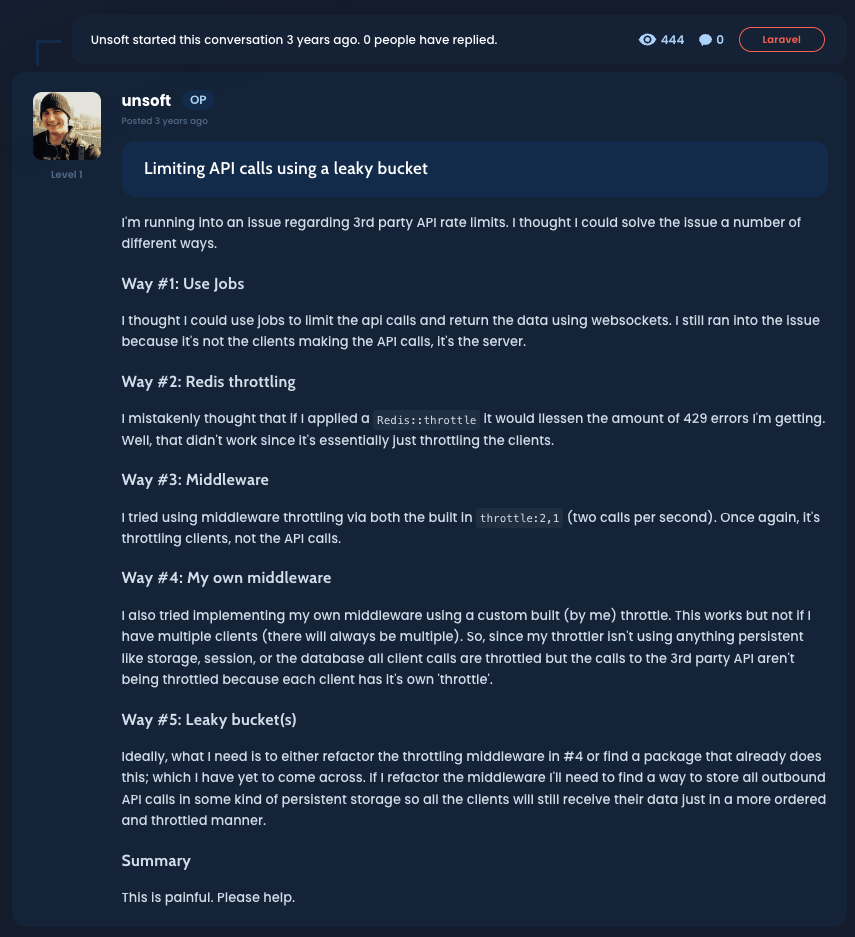

Case in point: I found this while Googling for this post. 3 years ago, 444 views, 0 responses (sad developer noises)

So, how do we fix it?

Time to throw everything we know out of the window. No more searching for existing solutions, no more Laravel middleware or Redis throttles; time to go another way. We solved it by once again diving into the Horizon source code and completely modifying its autoscaling. Now we run 1 supervisor per external API and user group, and just 1 queue per supervisor; this is done purely through the default Laravel Horizon configuration. We then changed Horizon’s built-in autoscaling so that it is based not on the number of pending jobs, but on the pending jobs grouped per user. We assign each job a unique key, which defines which API and user it belongs to. Every 10 seconds, we check all pending jobs to work out how many workers each queue needs based on the API/user combinations. Laravel then takes care of the rest and scales the queue’s workers to this number.

This way, we can ensure that:

- Each queue manages its own rate-limiting, so jobs do not conflict with one another

- Only APIs/users that need an active worker have one

- At most 1 worker is active for each user at any time

- We won’t have hundreds of queues and workers doing nothing (and reserving server resources)
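The counting step behind this can be sketched in a few lines of plain PHP. This is an illustration under assumptions, not the actual Horizon patch: the function name, the shape of the pending-job array and the per-queue cap are all hypothetical, and the part that reads pending jobs from Redis and hands the numbers to Horizon is left out.

```php
<?php

/**
 * Hypothetical helper: how many workers does each queue need right now?
 *
 * @param list<array{queue: string, key: string}> $pendingJobs one entry per pending job;
 *        'key' is the job's unique API/user key
 * @param int $maxPerQueue upper bound on workers per queue (assumed safety cap)
 * @return array<string, int> queue name => number of workers wanted
 */
function desiredWorkersPerQueue(array $pendingJobs, int $maxPerQueue): array
{
    $keysPerQueue = [];
    foreach ($pendingJobs as $job) {
        // Using the key as an array index de-duplicates jobs of the same API/user.
        $keysPerQueue[$job['queue']][$job['key']] = true;
    }

    $workers = [];
    foreach ($keysPerQueue as $queue => $keys) {
        // One worker per distinct API/user key with pending work, up to the cap.
        $workers[$queue] = min(count($keys), $maxPerQueue);
    }

    // Queues that do not appear in the result have no pending jobs.
    return $workers;
}
```

For example, three pending jobs on one queue that belong to only two different users would ask for two workers on that queue, no matter how many jobs each user has waiting.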

Within the application itself, all that’s needed is to use the tags() method in your jobs to define a unique tag per API/user. Apart from that, to solve the rate-limiting issue, we still use our custom throttling middleware, but that would be a blog post of its own, and this one is more about Laravel Horizon autoscaling and server resources.

In essence: we keep track of how many requests a job will perform and reserve them if that many requests are available, based on API responses. If you’re interested, send me an email and I’d be happy to tell you more about it.
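As for the tagging side, here is a minimal sketch of a job exposing one tag per API/user through tags(). The class name, constructor arguments and tag format are assumptions for illustration. To keep the snippet runnable on its own it is a plain class; in a real Laravel app the job would implement ShouldQueue and use the usual queue traits.

```php
<?php

/**
 * Hypothetical job (illustration only). In a Laravel app this class would
 * implement ShouldQueue and pull in the usual Dispatchable/Queueable traits.
 */
final class SyncOrders
{
    public function __construct(
        private string $api,
        private int $userId,
    ) {
    }

    /**
     * Horizon uses a job's tags() method, when defined, to tag the job.
     * Here the single tag doubles as the unique API/user key.
     *
     * @return list<string>
     */
    public function tags(): array
    {
        return ["{$this->api}:{$this->userId}"];
    }
}
```

A job created as `new SyncOrders('shop-api', 42)` would carry the tag `shop-api:42`.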

Finally, this set-up has now been running smoothly for us for about a month. I’m quite happy to have (potentially) solved an issue for which nobody else has yet (publicly) shared their fix, and that I may help other developers with this, but I can imagine this solution is still not perfect. Once again, any feedback is welcome.