Locking: it can be either your best friend or your worst enemy.

The situation

Imagine the following situation: you have implemented an endpoint and registered it with some external platform as a receiving endpoint for webhooks. This endpoint may, for example, receive order data, such as the recipient, the billing address, and all related order information (products, weights, prices, you name it).

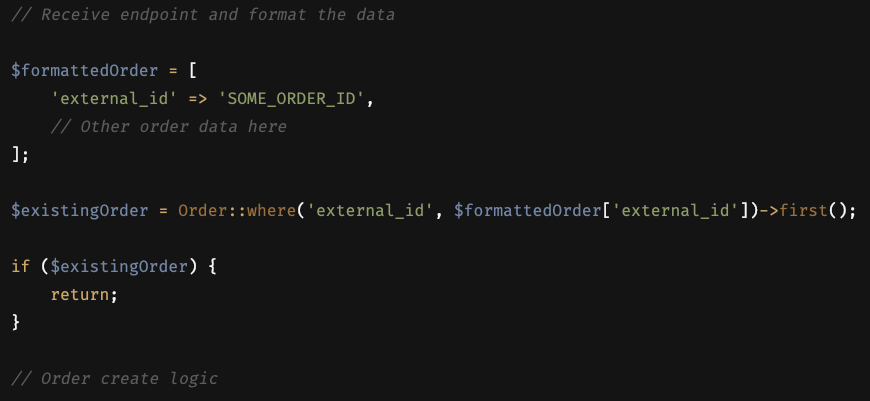

Now, when you want to process this order data, there needs to be some logic. First, we format the data into a uniform structure that all subsequent logic can work with. Then, we want to check whether the order already exists within our database. We can of course check if we have saved the order before by looking it up on the identifier that the external platform uses. If we simply perform the following:
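A minimal sketch of such a naive check, assuming a hypothetical Eloquent `Order` model with a fillable `external_id` column and an `id` key in the payload (none of these names come from a real schema), might look like this:

```php
<?php

use App\Models\Order; // hypothetical Eloquent model

/**
 * Naive check-then-insert: nothing stops two requests from both
 * passing the exists() check before either of them has saved.
 */
function handleOrderWebhook(array $payload): void
{
    $externalId = $payload['id']; // assumed payload key

    // Step 1: ask the database whether we already know this order.
    $alreadySaved = Order::where('external_id', $externalId)->exists();

    // Step 2: create it if not. The gap between step 1 and step 2
    // is where a second webhook can slip through.
    if (! $alreadySaved) {
        Order::create(['external_id' => $externalId]);
    }
}
```

Handled one at a time, two webhooks for the same order produce a single row. The trouble only starts when they overlap.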

… you may start to see the issue. These external platforms send us webhooks for just about anything you can imagine. Order initialized, order created, order paid, shipping address updated, order completed, which is good! Sometimes, these events follow each other so quickly that they happen within the same second, or even within the same few milliseconds. Uh-oh. Now imagine that one webhook has run this query but has not yet saved the order, and at that moment a second webhook for the same order arrives and runs the same query. The query will say “nope, doesn’t exist yet” for both of them, and the order will be created as a duplicate within our system, since neither of the two webhooks recognized the external order ID.

This is a real-life situation we have encountered many times while implementing integrations with big cloud e-commerce platforms such as Shopify and Lightspeed, but it is applicable to many other real-life situations outside of e-commerce (or webhooks, for that matter).

So, how do we fix it?

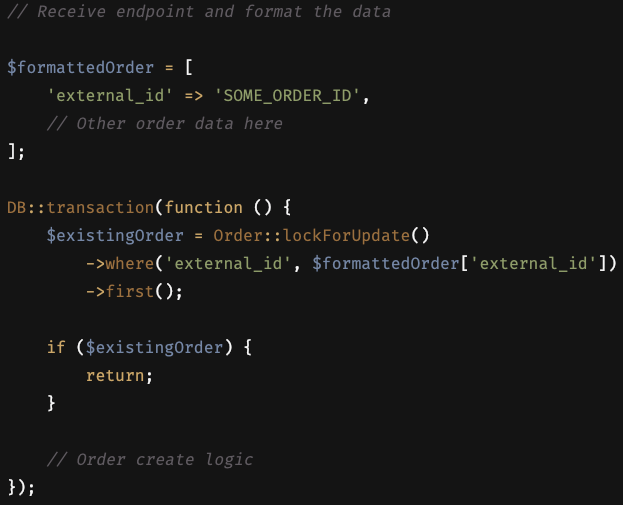

The first thought you might have (like we had) is database locking. Just give it a DB::transaction and YourModel::lockForUpdate as follows:
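As a rough sketch of that idea, again assuming the hypothetical `Order` model and `external_id` column, the check can be wrapped in a transaction with a pessimistic lock:

```php
<?php

use App\Models\Order; // hypothetical Eloquent model
use Illuminate\Support\Facades\DB;

function handleOrderWebhook(array $payload): void
{
    DB::transaction(function () use ($payload) {
        // Issues a SELECT ... FOR UPDATE: other transactions that touch
        // the matching rows have to wait until this one commits.
        $order = Order::where('external_id', $payload['id'])
            ->lockForUpdate()
            ->first();

        if ($order === null) {
            Order::create(['external_id' => $payload['id']]);
        }
    });
}
```

What exactly `FOR UPDATE` locks when no matching row exists yet depends on the database engine and isolation level, so treat this as an illustration of the idea rather than a guarantee.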

This logic will process the first webhook and, while that code is running, tell the next call “get in line please”. Every call after that is told the same, so the calls are handled one by one. Problem solved, right? No more duplicate orders!

If you have run into this problem before, you will immediately see what happens next. What if we receive 20 webhooks at once for the same order? What if we need to process thousands of webhook calls in an hour, or even within a minute? The “line” of calls waiting for the database lock grows so long that requests pile up behind one another (lock contention) until every call gets a timeout from your web server, and most likely the database starts timing out the queries as well.

Not only will you not be getting any duplicate orders this way, you won’t be getting any order data at all. So, what is the solution…?

Our saviour: Atomic Locks

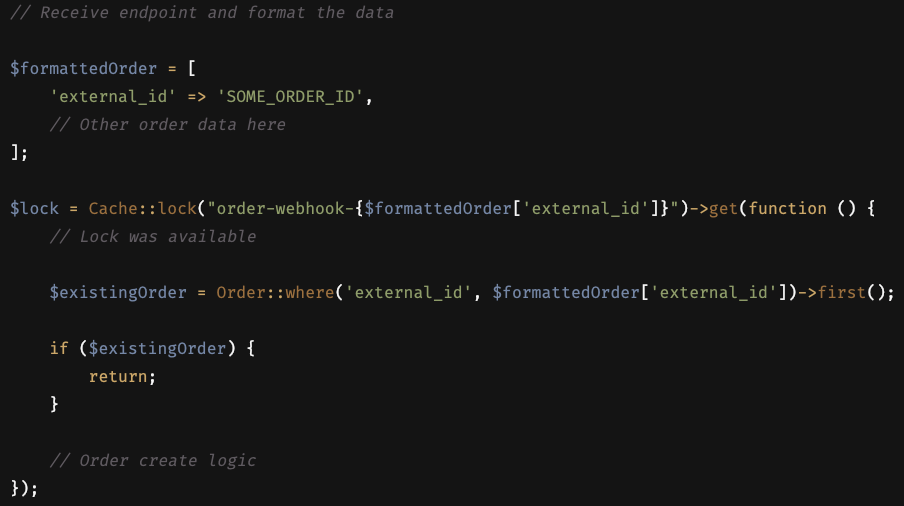

Laravel has a feature named “Atomic Locks”, available in Laravel 7 and, according to the cache documentation, in earlier releases as well. It integrates with your existing cache driver, provided that driver supports locks, though I would strongly recommend using a fast one. Laravel provides built-in support for Redis, which perfectly suits our needs (it’s quick!).

In this way, we have a way of defining a lock, or better said, many locks, defined by a key. This is perfect for our scenario, since our requirement is that orders are always unique based on their external order ID.

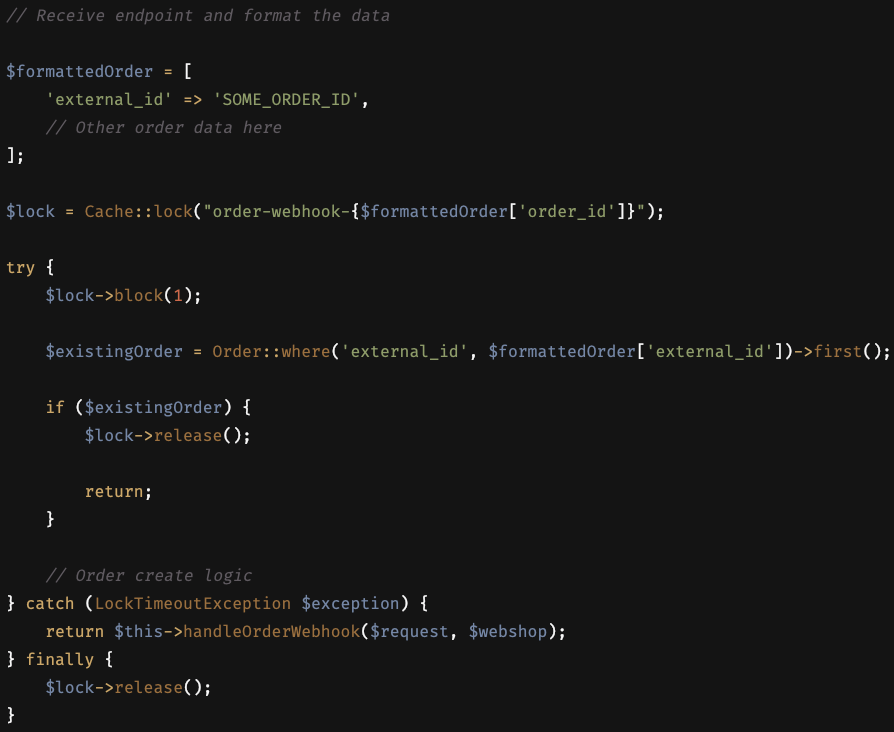

Consider the following example:
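One way to sketch this, assuming a made-up `order-webhook:` key prefix, a 10-second expiry, and a `$processOrder` callable standing in for your own logic (all three are illustrative choices, not part of Laravel):

```php
<?php

use Illuminate\Support\Facades\Cache;

function handleOrderWebhook(array $payload, callable $processOrder): void
{
    // One lock per external order ID; it expires by itself after 10 seconds.
    $lock = Cache::lock('order-webhook:' . $payload['id'], 10);

    // get() does not wait: it returns false if someone else holds the lock.
    if (! $lock->get()) {
        throw new RuntimeException('Order is already being processed.');
    }

    try {
        $processOrder($payload); // format, look up, create or update
    } finally {
        // Always release, even if processing throws.
        $lock->release();
    }
}
```

Because the key contains the order ID, webhooks for different orders never compete for the same lock.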

We’re almost there now. The database is no longer doing the locking (and thus, not building up a queue). Instead we are creating a unique lock in Redis for this specific order ID, and webhooks for other order IDs will pass through without issue.

Now there is one step remaining. What if a second webhook call arrives for the same order while the first is still holding the lock, and the second is the one that is actually relevant (for example because the first carried an event we did not want to process)? In this case the second webhook will get an error, since it was not able to acquire the lock.

The fix is to hold the lock for as long as your logic needs (in our example I will use just 1 second). If it could not get a lock, it will try again after a second. If the lock was successfully acquired, we can process the order, and after that release the lock:
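A sketch of that retry, under the same assumptions as before (made-up `order-webhook:` key prefix, a `$processOrder` callable for your own logic) plus an assumed cap of 60 attempts to stay under a typical request timeout:

```php
<?php

use Illuminate\Contracts\Cache\LockTimeoutException;
use Illuminate\Support\Facades\Cache;

function handleOrderWebhook(array $payload, callable $processOrder, int $attempt = 1): void
{
    $maxAttempts = 60; // assumed cap, roughly one per second of request time

    // The lock itself expires after 1 second.
    $lock = Cache::lock('order-webhook:' . $payload['id'], 1);

    try {
        // Wait up to 1 second for the lock to become free.
        $lock->block(1);
    } catch (LockTimeoutException $e) {
        if ($attempt >= $maxAttempts) {
            throw $e; // give up and let the caller deal with it
        }

        // Still locked: try again recursively.
        handleOrderWebhook($payload, $processOrder, $attempt + 1);
        return;
    }

    try {
        $processOrder($payload);
    } finally {
        $lock->release();
    }
}
```

The cap keeps the recursion bounded. Without it, a lock that never frees up would keep the request retrying until the web server cuts it off.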

Done!

Now only two threats remain. The first is your logic taking longer than a second, which can be handled by the recursive try/catch retry described above. The second is hitting your configured request timeout (usually 60 seconds for web servers like Nginx or Apache, so after 60 tries of 1 second), for example because slow external processes run inline instead of asynchronously in jobs. Other than that, we are now able to process 20 webhooks within the same second for one order (they will have their own “queue line”), as well as 1000 unique order IDs at once. Boom.